DYNAMIC WORLD CUP OPENER - TELEMUNDO

2022

OVERVIEW

The FIFA World Cup is the most-watched sporting event in the world. It is, without a doubt, one of the most relevant events in live broadcasting, with hundreds of cameras operating simultaneously and constant innovation in the graphics technology used to cover it. The event was also part of growing up for several members of our team, so we decided to honor it by creating a cinematic broadcast opener.

Unlike most of our projects at KeexFrame, this one was not initially commissioned by a TV network (although NBCUniversal Telemundo ended up using it for its Qatar 2022 coverage). That gave us the freedom to use some concepts and tools not commonly seen in broadcast, such as animated characters and cinematic storytelling. These have since become a crucial part of what we offer with this new type of opener.

THE CONCEPT

We wanted to create something that would honor the match from the players' perspective: their experience as modern “gladiators” confronting each other in a prestigious match. It was important for us to capture the excitement and weight that every match carries for a player. We also wanted characters that could represent “any” nationality and speak to any audience, which is when we came up with the idea of the “golden Roman/Greek gladiators”.

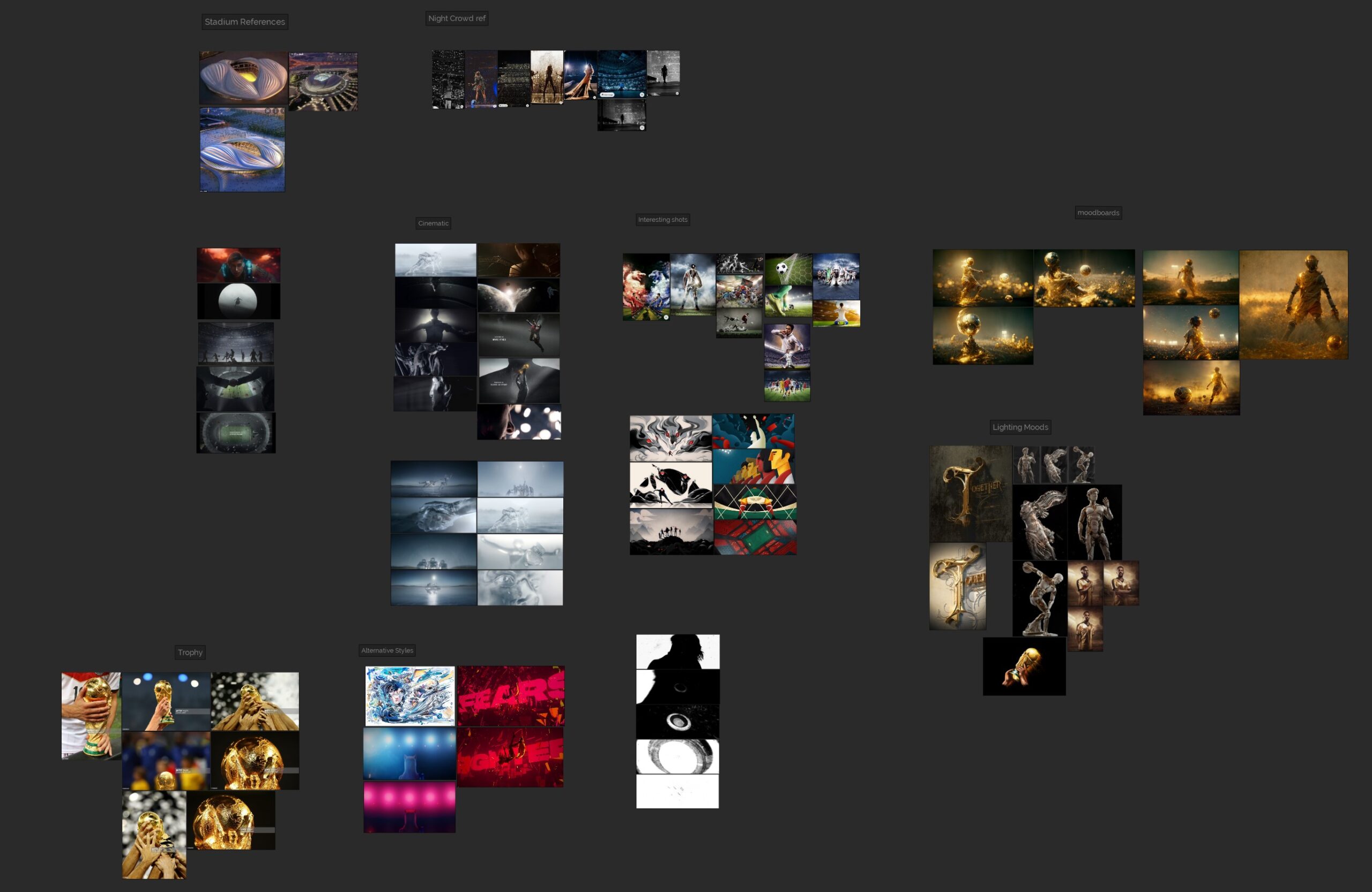

Mood-boarding and Style-framing

Once the concept was defined, our mood-boarding process combined research and sketching. We gathered references from other animations we admire (kudos to all of them) and went through several sketching iterations until we had some rough frames that reflected the mood we wanted for this piece.

We wanted to do our style-framing directly in Unreal Engine so we could define the lighting and atmospherics at the same time, given the typically short production times in broadcast. So the task became creating a few frames with the final look, rendered straight out of the engine.

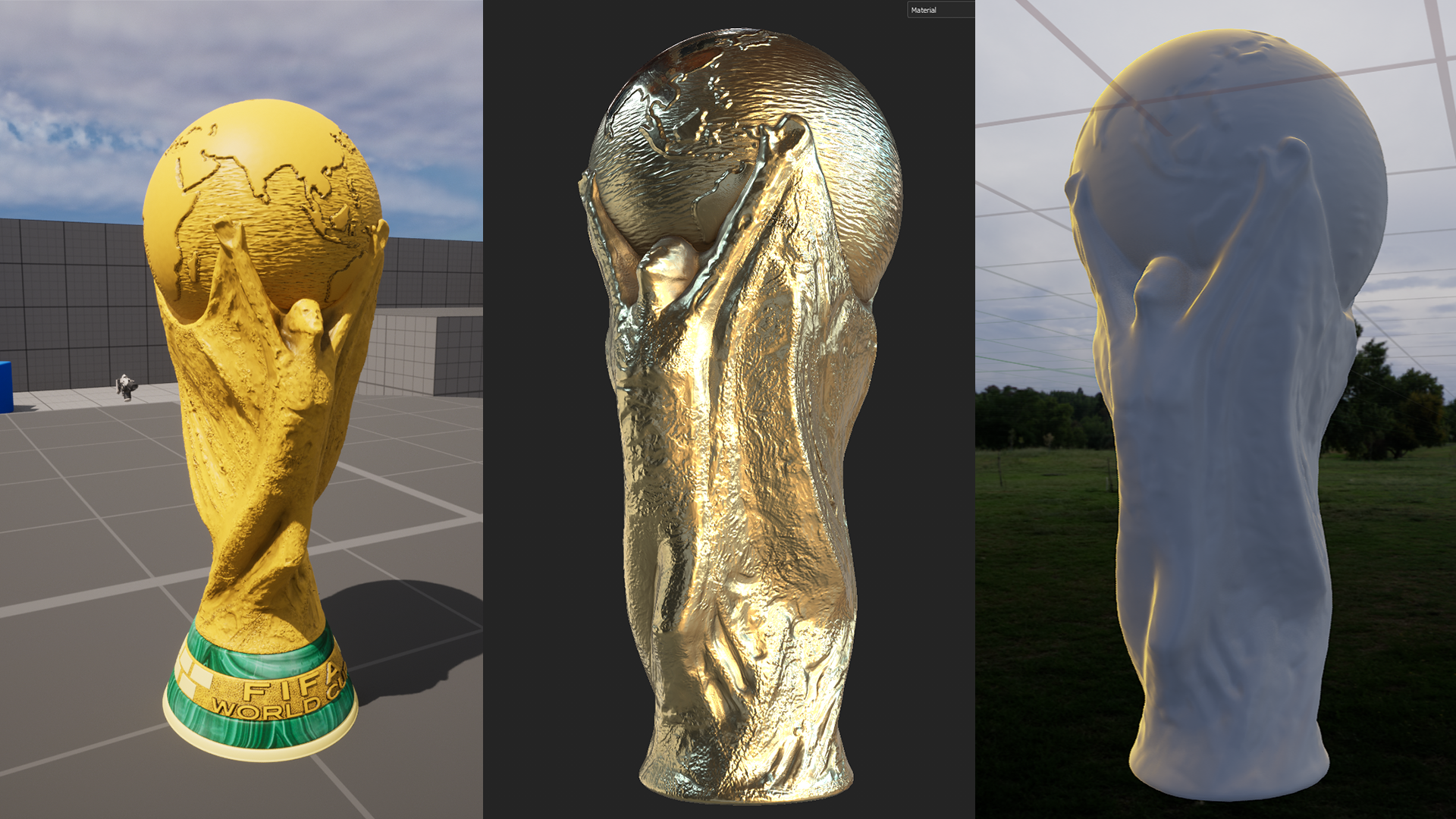

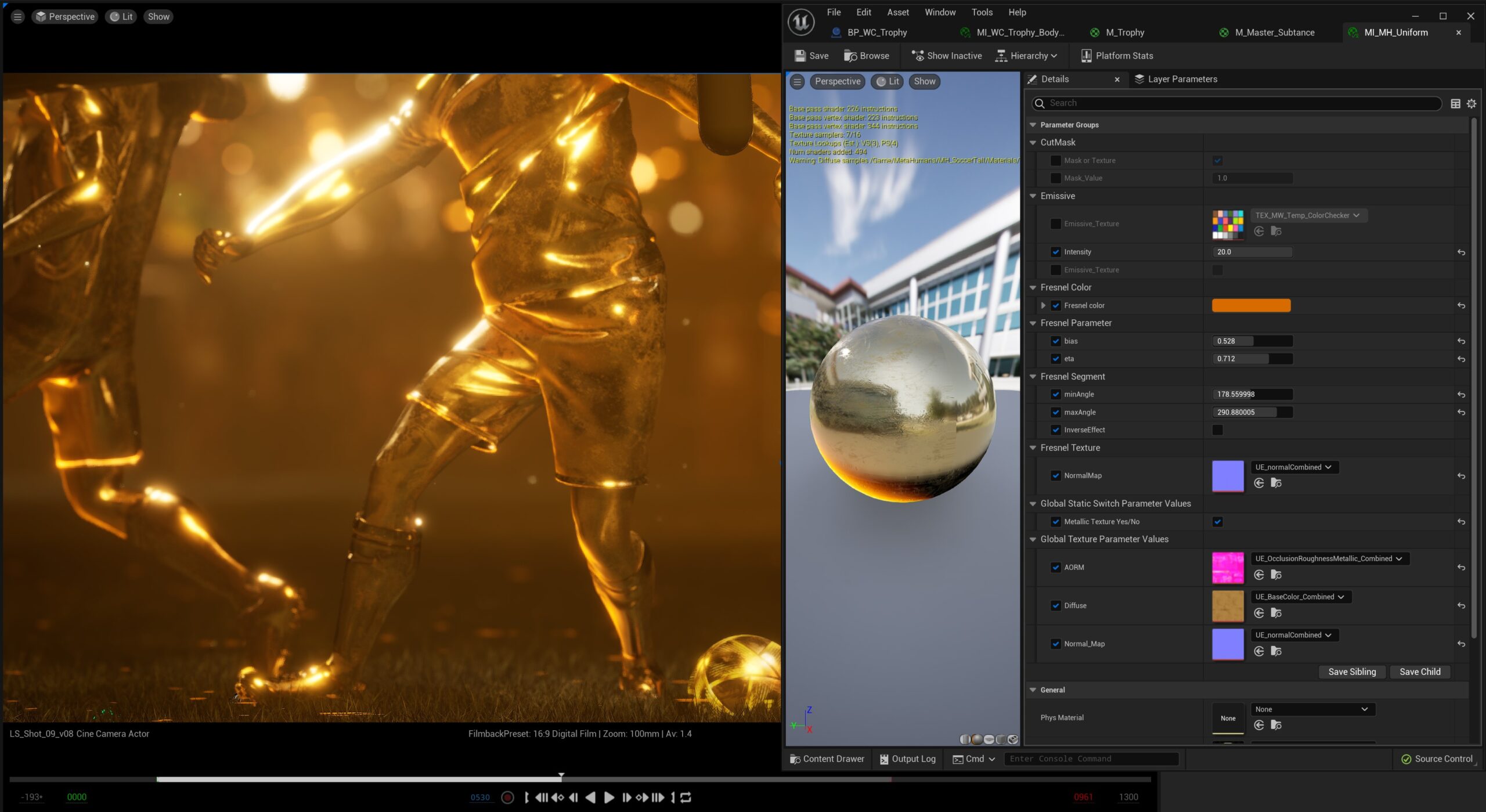

We started with the trophy model, since we knew it would be a key piece of the opener. We retopologized a model we had bought from a library and then created its PBR textures from scratch in Substance Painter. We also built a shader that adds a glow to the edges and details by connecting Fresnel nodes to the material's emissive input.
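
A Fresnel-driven edge glow is usually built on Schlick's approximation. The term stays close to a small base value where the surface faces the camera and rises toward 1 at grazing angles, which is why it picks out edges and relief. The Python sketch below shows only that math; it is not the actual Unreal material graph. The exponent of 5 and base reflect fraction of 0.04 are assumed common defaults. The glow colour and intensity are hypothetical, not the values used on the trophy.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)


def fresnel(normal, view_dir, exponent=5.0, base_reflect_fraction=0.04):
    """Schlick-style Fresnel: ~base_reflect_fraction facing the camera, 1.0 edge-on."""
    facing = max(0.0, dot(normalize(normal), normalize(view_dir)))
    return base_reflect_fraction + (1.0 - base_reflect_fraction) * (1.0 - facing) ** exponent


def edge_glow(normal, view_dir, glow_color=(1.0, 0.75, 0.3), intensity=10.0):
    """Hypothetical emissive output: glow colour scaled by intensity and the Fresnel mask."""
    mask = fresnel(normal, view_dir)
    return tuple(c * intensity * mask for c in glow_color)


# A surface facing the camera barely glows; a surface seen edge-on glows fully.
print(round(fresnel((0, 0, 1), (0, 0, 1)), 4))  # 0.04
print(edge_glow((1, 0, 0), (0, 0, 1)))
```

Feeding the mask into the emissive input, rather than into the base colour, lets the glow read as light even in darker shots.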

While working on the trophy model, we were also tweaking the environment and lighting. Because Unreal Engine lets us see the results in real time, our lighting and atmospherics were being solved while we were still defining the model details.

With the blocking stage happening in parallel, we were able to define some style-frames that served as a proof of concept for the look we were aiming to achieve.

Once we had these style-frames, made with the engine and a bit of color correction in Photoshop, we knew we could achieve our desired look entirely in-engine.

Blocking and Animation

When blocking the cameras, we focused primarily on storytelling. We wanted to convey the immense feeling of a stadium full of people and the excitement the players must feel before presenting themselves to the cheering crowd: the prestige and presence of the long-awaited “gladiators” walking onto the battlefield. We started with an establishing shot of the stadium (we chose Al-Janoub as our sample stadium because of its unique architecture) and then moved to the presentation of the players approaching the field. Unreal's Sequencer let us iterate on several versions very quickly and add camera shots as needed, which helped us tell the story at the pace that felt right.

For this part of the process, we drew heavily on what we learned during the Unreal Fellowship: Storytelling.

That level of flexibility during blocking let us start animating the players at this early stage using the Unreal mannequins, which were later replaced by custom MetaHumans. This was a significant change to our workflow: because we were integrating some “real” animation so early, we could block the cameras much more accurately.

Player creation

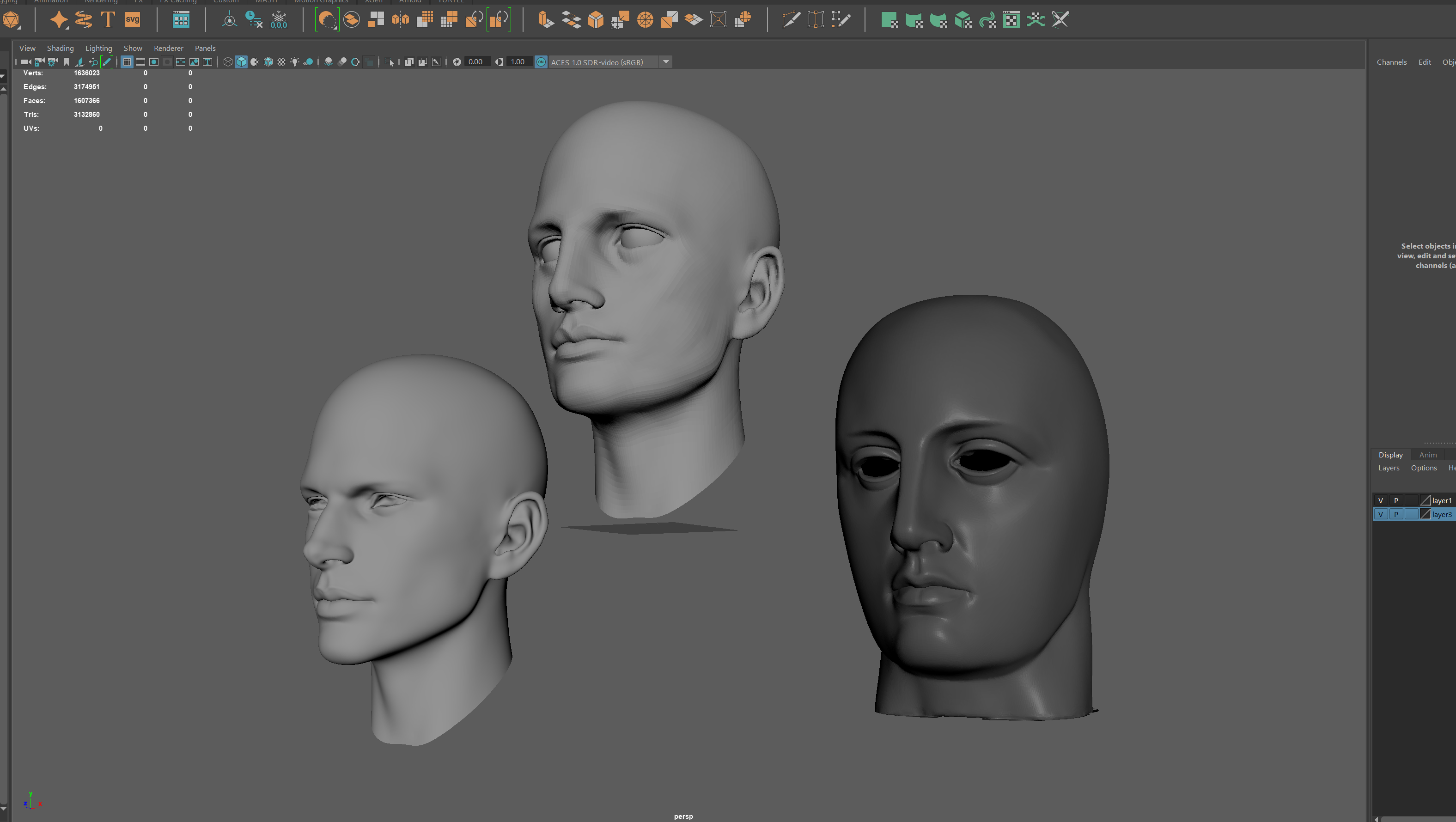

For the players, we drew a lot of inspiration from the Cup itself, whose shapes have an imperfect, tactile feel. We wanted to capture the feeling of a “hand-sculpted golden Greek statue”. We started by creating a head model that would capture the features of a Greek sculpture while feeling a bit more human. To do this, we took two base models and created a final head that mixes features of both.

Once we were happy with the shape of the head, we used the Mesh to MetaHuman tool to create a MetaHuman from our model.

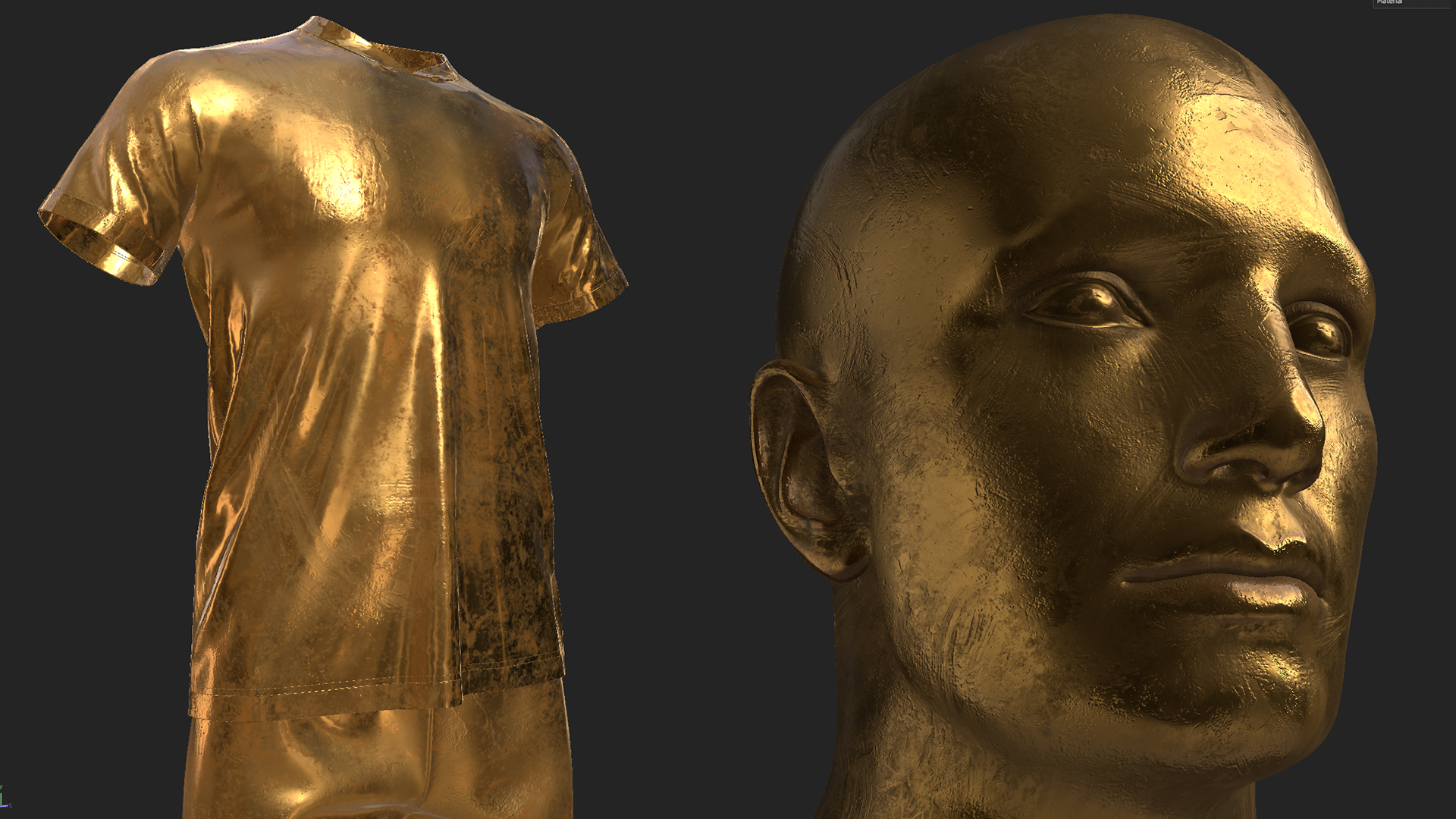

For the shading, we used Adobe Substance to add details to the body and clothing, giving them the feel of hand-sculpted gold.

We created a single master material for all the gold elements and gave it enough functionality to be fully art-directable. This allowed specific per-shot tweaks, depending on the story and on how much emphasis we wanted to give the gold in each shot.
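
In Unreal, this pattern is typically a master material that exposes parameters, with material instances overriding only what a given shot needs. The sketch below models that idea in plain Python. The parameter names, default values and shot names are all hypothetical and are not taken from the production material.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class GoldParams:
    """Hypothetical parameters exposed by the gold master material."""
    base_color: tuple = (1.0, 0.78, 0.34)
    roughness: float = 0.3
    metallic: float = 1.0
    edge_glow: float = 5.0
    sculpt_detail: float = 1.0


MASTER = GoldParams()

# Per-shot overrides (hypothetical shot names): list only what changes.
SHOT_OVERRIDES = {
    "sh010_stadium": {},
    "sh040_trophy_closeup": {"edge_glow": 12.0, "roughness": 0.2},
    "sh070_player_walk": {"sculpt_detail": 1.5},
}


def params_for_shot(shot):
    """Return the master defaults with this shot's overrides applied."""
    return replace(MASTER, **SHOT_OVERRIDES.get(shot, {}))


print(params_for_shot("sh040_trophy_closeup"))
```

Keeping the overrides sparse means that changing a master default propagates to every shot that does not explicitly override it.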

Animation retargeting

Once the full animatic was completed and approved by our creative director, all the animation was created on the UE mannequins and then retargeted to the custom MetaHumans. Chain-based retargeting made this extremely efficient, requiring only minimal per-character tweaking in Sequencer.
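
Chain-based retargeting pairs bone chains between two skeletons by name. It then maps the bones inside each chain by their relative position, so chains with different bone counts (spines, for example) still line up. The sketch below shows only that mapping step. Unreal's IK Retargeter also transfers rotations and handles IK goals and root motion, among other things, none of which is modeled here. All chain and bone names are hypothetical.

```python
def map_chain(source_bones, target_bones):
    """Map each target bone to the source bone at the same relative position along the chain."""
    mapping = {}
    last_src = len(source_bones) - 1
    last_tgt = len(target_bones) - 1
    for i, target_bone in enumerate(target_bones):
        t = i / last_tgt if last_tgt > 0 else 0.0  # 0.0 at chain start, 1.0 at chain end
        mapping[target_bone] = source_bones[int(t * last_src + 0.5)]  # round half up
    return mapping


def retarget_chains(source_chains, target_chains):
    """Pair chains by name; target chains with no source counterpart stay unmapped."""
    mapping = {}
    for chain_name, target_bones in target_chains.items():
        source_bones = source_chains.get(chain_name)
        if source_bones:
            mapping.update(map_chain(source_bones, target_bones))
    return mapping


# Hypothetical skeletons: a 3-bone source spine driving a 5-bone target spine.
source = {
    "Spine": ["s_spine_1", "s_spine_2", "s_spine_3"],
    "LeftArm": ["s_upperarm_l", "s_lowerarm_l", "s_hand_l"],
}
target = {
    "Spine": ["t_spine_1", "t_spine_2", "t_spine_3", "t_spine_4", "t_spine_5"],
    "LeftArm": ["t_upperarm_l", "t_lowerarm_l", "t_hand_l"],
    "Tail": ["t_tail_1"],
}
for target_bone, source_bone in retarget_chains(source, target).items():
    print(f"{target_bone} <- {source_bone}")
```

Because chains are matched by name rather than by exact bone lists, the same source animation can drive several target characters once each one has its chains defined.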

The MetaHuman control rig (FK and IK) made it easy to tweak the animations after retargeting. We were able to generate multiple iterations of each motion with different levels of strength, intention and timing to give the characters the right personality.

We could also present them for approval immediately, in the context of the opener and with the final shading and lighting, in real time.

We used the modular characters approach to modify the MetaHumans and add soccer shoes and socks, with the same skeleton driving all the different skeletal meshes that make up the complete model.
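
Sharing one skeleton means the pose is evaluated once and every modular part (body, shoes, socks) simply follows it. This is similar in spirit to Unreal's leader pose setup. The plain-Python sketch below models only that relationship. The class names are hypothetical and no engine API is implied.

```python
from dataclasses import dataclass, field


@dataclass
class Skeleton:
    name: str
    bones: tuple


@dataclass
class MeshPart:
    name: str
    skeleton: Skeleton
    pose: dict = field(default_factory=dict)


@dataclass
class ModularCharacter:
    skeleton: Skeleton
    parts: list = field(default_factory=list)

    def add_part(self, part):
        # Every part must be skinned to the shared skeleton, or it cannot follow the pose.
        if part.skeleton is not self.skeleton:
            raise ValueError(f"{part.name} is not skinned to {self.skeleton.name}")
        self.parts.append(part)

    def apply_pose(self, pose):
        # The pose is computed once and handed to every part, so the parts never drift apart.
        for part in self.parts:
            part.pose = pose


skeleton = Skeleton("player_skel", ("pelvis", "thigh_l", "calf_l", "foot_l"))
player = ModularCharacter(skeleton)
for name in ("body", "soccer_shoes", "socks"):
    player.add_part(MeshPart(name, skeleton))
player.apply_pose({"foot_l": (0.0, 30.0, 0.0)})
print([part.pose is player.parts[0].pose for part in player.parts])  # [True, True, True]
```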

Cloth simulation

For the cloth simulation of the flags, we used Maya and imported the result into Unreal Engine as an Alembic cache. Sequencer allowed us to retime the cache with variable timing, which added a lot of drama to the flag shots in the opener.
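
A variable retime can be thought of as a curve that maps shot time to cache time; where the curve is flatter, the cache plays more slowly. The generic Python sketch below interpolates linearly between keys. It is not how Sequencer implements retiming, and the key values and frame rate are hypothetical.

```python
from bisect import bisect_right


def retime(shot_time, keys):
    """Map shot time (s) to cache time (s) through sorted (shot_time, cache_time) keys.

    Linear interpolation between keys; times outside the keyed range are clamped.
    """
    if shot_time <= keys[0][0]:
        return keys[0][1]
    if shot_time >= keys[-1][0]:
        return keys[-1][1]
    i = bisect_right([shot for shot, _ in keys], shot_time)
    (t0, c0), (t1, c1) = keys[i - 1], keys[i]
    alpha = (shot_time - t0) / (t1 - t0)
    return c0 + alpha * (c1 - c0)


def cache_frame(shot_frame, fps, keys):
    """Frame of the cache to display on a given shot frame."""
    return retime(shot_frame / fps, keys) * fps


# Hypothetical curve: real time for 1 s, quarter speed for 2 s, then real time again.
FLAG_KEYS = [(0.0, 0.0), (1.0, 1.0), (3.0, 1.5), (4.0, 2.5)]

for frame in (0, 24, 48, 72, 96):
    print(frame, cache_frame(frame, 24, FLAG_KEYS))
```

Because the cache is baked, retiming only changes which cached frame is shown; the simulation itself does not need to be re-run.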

It was important to us that the players' clothing reacted realistically to their motion; we wanted proper cloth folding to accentuate the drama of each movement. We decided to use Marvelous Designer to create custom shirts and shorts for the players, exporting the MetaHuman base character to Marvelous Designer so the clothing could be custom-fitted.

Once the clothing was created, we exported each shot's animation clip as an FBX file. We then imported it into Marvelous Designer to simulate the cloth and re-exported the result as an Alembic cache for use in Unreal Engine.

Although the Alembic method worked very well for us, we are currently migrating the clothing to native Unreal Engine clothing.

We then combined the skeletal meshes with the Alembic cloth caches to complete each player model.

Lighting and rendering

We strongly believe that lighting can make or break a scene, so we approached our lighting composition in a deliberate and creative way. Our main concept was a battle, and we needed a lighting style and atmosphere that would support it. We took advantage of Lumen, the new lighting solution in Unreal Engine 5.

Using "Lumen Global Illumination" together with hardware RTX reflections and shadows was a game changer for the development of our World Cup opener. "Lumen Global Illumination" gave us a realistic mood and the perfect base illumination, which let us concentrate on detailed lighting.

We combined several light source types: directional, rect, and spot lights. The fog and volumetric effects were handled by "Exponential Height Fog" and "Sky Atmosphere".

Each light source had its own volumetric influence value so we could simulate the look of typical stadium lighting.

Hardware RTX reflections carried most of the weight of the stylized look. The shiny golden tones they produced set a precedent for our production and style. Although hardware RTX reflections are quite costly in performance, they are one of our preferred solutions because of their precision. We balanced performance against quality with console variables (CVars) in the final high-quality render settings.

We used a "Post Process Volume" to enhance the glow and lens flares of every light source.

Interactivity and production

One of the main goals of this project was to create an opener that, while having a cinematic look, kept the editability needed for live production. This would allow the network's graphics department to change the teams at the last minute and have multiple versions of the opener on demand.

To do that, we built a system in which all the visual elements for each team could be changed from a dropdown menu in a single control Blueprint.

We wanted operating the opener to be as simple as choosing each team and then sending it to render (or even playing it live if needed). We created a Blueprint that read all the values to modify for each team, such as textures and colors, from a data table. We also wanted to let a producer with no Unreal Engine or graphics operation knowledge modify the opener from anywhere inside the network.
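
A data-table-driven control like this can be sketched outside Unreal as a lookup from a row name to the values that change per team. This is a minimal sketch, assuming hypothetical columns (`flag_texture`, `primary_color`, `secondary_color`), placeholder asset paths and colors, and a home/away pair of selections; it is not the project's actual Blueprint or data table layout.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TeamRow:
    """One data-table row. Columns are hypothetical, for illustration only."""
    flag_texture: str     # asset path of the flag texture
    primary_color: str    # hex color used for kits and accents
    secondary_color: str


# Placeholder rows: names, asset paths, and colors are made up for the example.
TEAM_TABLE = {
    "Team A": TeamRow("/Game/Teams/T_Flag_TeamA", "#1F4FA0", "#FFFFFF"),
    "Team B": TeamRow("/Game/Teams/T_Flag_TeamB", "#C8102E", "#FFD100"),
}


def dropdown_options(table):
    """Row names shown in the control's dropdown, sorted for the operator."""
    return sorted(table)


def build_overrides(home, away, table):
    """Resolve both selections to the per-side values the scene needs."""
    overrides = {}
    for side, team in (("home", home), ("away", away)):
        if team not in table:
            raise KeyError(f"unknown team: {team!r}")
        row = table[team]
        overrides[f"{side}.flag_texture"] = row.flag_texture
        overrides[f"{side}.primary_color"] = row.primary_color
        overrides[f"{side}.secondary_color"] = row.secondary_color
    return overrides
```

Keeping every per-team value in one table means adding a team is a data change rather than a scene change, which is what makes last-minute swaps safe.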

To let producers change the opener remotely, we used the Remote Control plugin for Unreal Engine, which allowed us to build a web-based controller to change the teams in the opener and even send it to render if needed.
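
Unreal's Remote Control API exposes an HTTP endpoint (by default on port 30010) that accepts `PUT /remote/object/call` requests whose JSON body names an object path, a function, and its parameters. Below is a minimal Python sketch of the request a web controller might send, assuming the control Blueprint exposes a hypothetical `SetTeams` function with `HomeTeam` and `AwayTeam` inputs at a hypothetical object path.

```python
import json
import urllib.request

# Remote Control HTTP endpoint on the machine running the editor (default port).
RC_URL = "http://localhost:30010/remote/object/call"

# Hypothetical: object path of the control Blueprint actor in the level and
# the function it exposes. Replace with the project's real values.
CONTROL_OBJECT = "/Game/Maps/Opener.Opener:PersistentLevel.BP_OpenerControl_C_1"
SET_TEAMS_FN = "SetTeams"


def build_set_teams_request(home, away, url=RC_URL):
    """Build (but do not send) the PUT request that calls SetTeams."""
    body = {
        "objectPath": CONTROL_OBJECT,
        "functionName": SET_TEAMS_FN,
        # Keys must match the function's input names in the Blueprint.
        "parameters": {"HomeTeam": home, "AwayTeam": away},
        "generateTransaction": True,  # record the call as an undoable transaction
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


def send(request, timeout=5.0):
    """Send the request to a running editor; returns (status, response text)."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return resp.status, resp.read().decode("utf-8")
```

Separating request construction from `send` keeps the payload easy to inspect or log before it reaches the editor, which matters when the operator is not a graphics specialist.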

The Remote Control plugin is a must-have in live production environments where editorial changes are unpredictable, such as news or sports. It lets editorial control reside with the production crew rather than the graphics team, which improves efficiency during live production.

This tool became crucial during NBCUniversal Telemundo's Qatar 2022 coverage, in which the teams sometimes had to be picked only a few hours before a match was broadcast live.

Music

All the music was composed originally for this piece in collaboration with Masterheadlab, a long-term collaborator of KeexFrame. We wanted to capture the essence of Qatar while keeping an orchestral approach, so we introduced Middle Eastern drums and accents to evoke that feeling.

Conclusion

Creating this project made us understand the importance and potential of broadcast cinematics in the new era of broadcast graphics we are entering. Real-time rendering is enabling graphics capabilities never seen before in a live production environment.

Projects like this are becoming a key part of our future strategy for high-end broadcast graphics, particularly because of their emphasis on storytelling, and we are truly excited about what is coming.
